Table of Contents

- 17.1 Configuring Replication

- 17.1.1 Binary Log File Position Based Replication Configuration Overview

- 17.1.2 Setting Up Binary Log File Position Based Replication

- 17.1.3 Replication with Global Transaction Identifiers

- 17.1.4 MySQL Multi-Source Replication

- 17.1.5 Changing Replication Modes on Online Servers

- 17.1.6 Replication and Binary Logging Options and Variables

- 17.1.7 Common Replication Administration Tasks

- 17.2 Replication Implementation

- 17.3 Replication Solutions

- 17.3.1 Using Replication for Backups

- 17.3.2 Handling an Unexpected Halt of a Replication Slave

- 17.3.3 Monitoring Row-based Replication

- 17.3.4 Using Replication with Different Master and Slave Storage Engines

- 17.3.5 Using Replication for Scale-Out

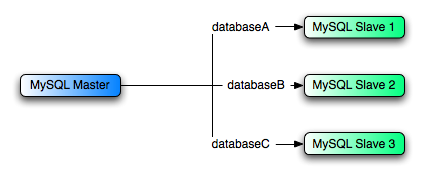

- 17.3.6 Replicating Different Databases to Different Slaves

- 17.3.7 Improving Replication Performance

- 17.3.8 Switching Masters During Failover

- 17.3.9 Setting Up Replication to Use Encrypted Connections

- 17.3.10 Encrypting Binary Log Files and Relay Log Files

- 17.3.11 Semisynchronous Replication

- 17.3.12 Delayed Replication

- 17.4 Replication Notes and Tips

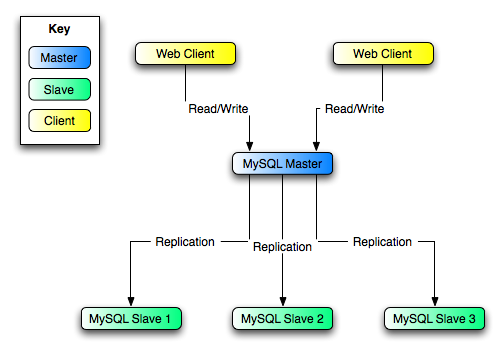

Replication enables data from one MySQL database server (the master) to be copied to one or more MySQL database servers (the slaves). Replication is asynchronous by default; slaves do not need to be connected permanently to receive updates from the master. Depending on the configuration, you can replicate all databases, selected databases, or even selected tables within a database.

Advantages of replication in MySQL include:

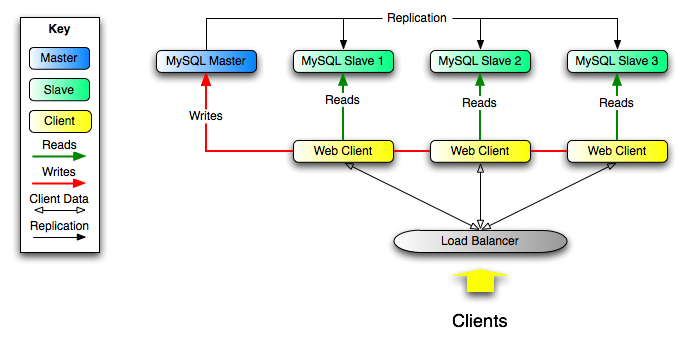

Scale-out solutions - spreading the load among multiple slaves to improve performance. In this environment, all writes and updates must take place on the master server. Reads, however, may take place on one or more slaves. This model can improve the performance of writes (since the master is dedicated to updates), while dramatically increasing read speed across an increasing number of slaves.

Data security - because data is replicated to the slave, and the slave can pause the replication process, it is possible to run backup services on the slave without corrupting the corresponding master data.

Analytics - live data can be created on the master, while the analysis of the information can take place on the slave without affecting the performance of the master.

Long-distance data distribution - you can use replication to create a local copy of data for a remote site to use, without permanent access to the master.

For information on how to use replication in such scenarios, see Section 17.3, “Replication Solutions”.

MySQL 8.0 supports different methods of replication. The traditional method is based on replicating events from the master's binary log, and requires the log files and positions in them to be synchronized between master and slave. The newer method based on global transaction identifiers (GTIDs) is transactional and therefore does not require working with log files or positions within these files, which greatly simplifies many common replication tasks. Replication using GTIDs guarantees consistency between master and slave as long as all transactions committed on the master have also been applied on the slave. For more information about GTIDs and GTID-based replication in MySQL, see Section 17.1.3, “Replication with Global Transaction Identifiers”. For information on using binary log file position based replication, see Section 17.1, “Configuring Replication”.

Replication in MySQL supports different types of synchronization. The original type of synchronization is one-way, asynchronous replication, in which one server acts as the master, while one or more other servers act as slaves. This is in contrast to the synchronous replication which is a characteristic of NDB Cluster (see Chapter 22, MySQL NDB Cluster 8.0). In MySQL 8.0, semisynchronous replication is supported in addition to the built-in asynchronous replication. With semisynchronous replication, a commit performed on the master blocks before returning to the session that performed the transaction until at least one slave acknowledges that it has received and logged the events for the transaction; see Section 17.3.11, “Semisynchronous Replication”. MySQL 8.0 also supports delayed replication such that a slave server deliberately lags behind the master by at least a specified amount of time; see Section 17.3.12, “Delayed Replication”. For scenarios where synchronous replication is required, use NDB Cluster (see Chapter 22, MySQL NDB Cluster 8.0).

There are a number of solutions available for setting up replication between servers, and the best method to use depends on the presence of data and the engine types you are using. For more information on the available options, see Section 17.1.2, “Setting Up Binary Log File Position Based Replication”.

There are two core types of replication format, Statement Based Replication (SBR), which replicates entire SQL statements, and Row Based Replication (RBR), which replicates only the changed rows. You can also use a third variety, Mixed Based Replication (MBR). For more information on the different replication formats, see Section 17.2.1, “Replication Formats”.

Replication is controlled through a number of different options and variables. For more information, see Section 17.1.6, “Replication and Binary Logging Options and Variables”.

You can use replication to solve a number of different problems, including performance, supporting the backup of different databases, and as part of a larger solution to alleviate system failures. For information on how to address these issues, see Section 17.3, “Replication Solutions”.

For notes and tips on how different data types and statements are treated during replication, including details of replication features, version compatibility, upgrades, and potential problems and their resolution, see Section 17.4, “Replication Notes and Tips”. For answers to some questions often asked by those who are new to MySQL Replication, see Section A.13, “MySQL 8.0 FAQ: Replication”.

For detailed information on the implementation of replication, how replication works, the process and contents of the binary log, background threads and the rules used to decide how statements are recorded and replicated, see Section 17.2, “Replication Implementation”.

- 17.1.1 Binary Log File Position Based Replication Configuration Overview

- 17.1.2 Setting Up Binary Log File Position Based Replication

- 17.1.3 Replication with Global Transaction Identifiers

- 17.1.4 MySQL Multi-Source Replication

- 17.1.5 Changing Replication Modes on Online Servers

- 17.1.6 Replication and Binary Logging Options and Variables

- 17.1.7 Common Replication Administration Tasks

This section describes how to configure the different types of replication available in MySQL and includes the setup and configuration required for a replication environment, including step-by-step instructions for creating a new replication environment. The major components of this section are:

For a guide to setting up two or more servers for replication using binary log file positions, Section 17.1.2, “Setting Up Binary Log File Position Based Replication”, deals with the configuration of the servers and provides methods for copying data between the master and slaves.

For a guide to setting up two or more servers for replication using GTID transactions, Section 17.1.3, “Replication with Global Transaction Identifiers”, deals with the configuration of the servers.

Events in the binary log are recorded using a number of formats. These are referred to as statement-based replication (SBR) or row-based replication (RBR). A third type, mixed-format replication (MIXED), uses SBR or RBR replication automatically to take advantage of the benefits of both SBR and RBR formats when appropriate. The different formats are discussed in Section 17.2.1, “Replication Formats”.

Detailed information on the different configuration options and variables that apply to replication is provided in Section 17.1.6, “Replication and Binary Logging Options and Variables”.

Once started, the replication process should require little administration or monitoring. However, for advice on common tasks that you may want to execute, see Section 17.1.7, “Common Replication Administration Tasks”.

This section describes replication between MySQL servers based on the binary log file position method, where the MySQL instance operating as the master (the source of the database changes) writes updates and changes as “events” to the binary log. The information in the binary log is stored in different logging formats according to the database changes being recorded. Slaves are configured to read the binary log from the master and to execute the events in the binary log on the slave's local database.

Each slave receives a copy of the entire contents of the binary log. It is the responsibility of the slave to decide which statements in the binary log should be executed. Unless you specify otherwise, all events in the master binary log are executed on the slave. If required, you can configure the slave to process only events that apply to particular databases or tables.

You cannot configure the master to log only certain events.

Each slave keeps a record of the binary log coordinates: the file name and position within the file that it has read and processed from the master. This means that multiple slaves can be connected to the master and executing different parts of the same binary log. Because the slaves control this process, individual slaves can be connected and disconnected from the server without affecting the master's operation. Also, because each slave records the current position within the binary log, it is possible for slaves to be disconnected, reconnect and then resume processing.

The master and each slave must be configured with a unique ID

(using the server-id option). In

addition, each slave must be configured with information about the

master host name, log file name, and position within that file.

These details can be controlled from within a MySQL session using

the CHANGE MASTER TO statement on

the slave. The details are stored within the slave's master

info repository (see Section 17.2.4, “Replication Relay and Status Logs”).

- 17.1.2.1 Setting the Replication Master Configuration

- 17.1.2.2 Setting the Replication Slave Configuration

- 17.1.2.3 Creating a User for Replication

- 17.1.2.4 Obtaining the Replication Master Binary Log Coordinates

- 17.1.2.5 Choosing a Method for Data Snapshots

- 17.1.2.6 Setting Up Replication Slaves

- 17.1.2.7 Setting the Master Configuration on the Slave

- 17.1.2.8 Adding Slaves to a Replication Environment

This section describes how to set up a MySQL server to use binary log file position based replication. There are a number of different methods for setting up replication, and the exact method to use depends on how you are setting up replication, and whether you already have data within your master database.

There are some generic tasks that are common to all setups:

On the master, you must ensure that binary logging is enabled, and configure a unique server ID. This might require a server restart. See Section 17.1.2.1, “Setting the Replication Master Configuration”.

On each slave that you want to connect to the master, you must configure a unique server ID. This might require a server restart. See Section 17.1.2.2, “Setting the Replication Slave Configuration”.

Optionally, create a separate user for your slaves to use during authentication with the master when reading the binary log for replication. See Section 17.1.2.3, “Creating a User for Replication”.

Before creating a data snapshot or starting the replication process, on the master you should record the current position in the binary log. You need this information when configuring the slave so that the slave knows where within the binary log to start executing events. See Section 17.1.2.4, “Obtaining the Replication Master Binary Log Coordinates”.

If you already have data on the master and want to use it to synchronize the slave, you need to create a data snapshot to copy the data to the slave. The storage engine you are using has an impact on how you create the snapshot. When you are using

MyISAM, you must stop processing statements on the master to obtain a read-lock, then obtain its current binary log coordinates and dump its data, before permitting the master to continue executing statements. If you do not stop the execution of statements, the data dump and the master status information will not match, resulting in inconsistent or corrupted databases on the slaves. For more information on replicating aMyISAMmaster, see Section 17.1.2.4, “Obtaining the Replication Master Binary Log Coordinates”. If you are usingInnoDB, you do not need a read-lock and a transaction that is long enough to transfer the data snapshot is sufficient. For more information, see Section 15.18, “InnoDB and MySQL Replication”.Configure the slave with settings for connecting to the master, such as the host name, login credentials, and binary log file name and position. See Section 17.1.2.7, “Setting the Master Configuration on the Slave”.

Certain steps within the setup process require the

SUPER privilege. If you do not

have this privilege, it might not be possible to enable

replication.

After configuring the basic options, select your scenario:

To set up replication for a fresh installation of a master and slaves that contain no data, see Section 17.1.2.6.1, “Setting Up Replication with New Master and Slaves”.

To set up replication of a new master using the data from an existing MySQL server, see Section 17.1.2.6.2, “Setting Up Replication with Existing Data”.

To add replication slaves to an existing replication environment, see Section 17.1.2.8, “Adding Slaves to a Replication Environment”.

Before administering MySQL replication servers, read this entire chapter and try all statements mentioned in Section 13.4.1, “SQL Statements for Controlling Master Servers”, and Section 13.4.2, “SQL Statements for Controlling Slave Servers”. Also familiarize yourself with the replication startup options described in Section 17.1.6, “Replication and Binary Logging Options and Variables”.

To configure a master to use binary log file position based replication, you must ensure that binary logging is enabled, and establish a unique server ID. If this has not already been done, a server restart is required.

Binary logging is required on the master because the binary log

is the basis for replicating changes from the master to its

slaves. Binary logging is enabled by default (the

log_bin system variable is set

to ON). The --log-bin option

tells the server what base name to use for binary log files. It

is recommended that you specify this option to give the binary

log files a non-default base name, so that if the host name

changes, you can easily continue to use the same binary log file

names (see Section B.6.7, “Known Issues in MySQL”).

Each server within a replication topology must be configured

with a unique server ID, which you can specify using the

--server-id option. This server

ID is used to identify individual servers within the replication

topology, and must be a positive integer between 1 and

(232)−1. If you set a server ID

of 0 on a master, it refuses any connections from slaves, and if

you set a server ID of 0 on a slave, it refuses to connect to a

master. Other than that, how you organize and select the numbers

is your choice, so long as each server ID is different from

every other server ID in use by any other server in the

replication topology. The

server_id system variable is

set to 1 by default. A server can be started with this default

server ID, but an informational message is issued if you did not

specify a server ID explicitly.

The following options also have an impact on the replication master:

For the greatest possible durability and consistency in a replication setup using

InnoDBwith transactions, you should useinnodb_flush_log_at_trx_commit=1andsync_binlog=1in the replication master'smy.cnffile.Ensure that the

skip-networkingoption is not enabled on the replication master. If networking has been disabled, the slave cannot communicate with the master and replication fails.

Each replication slave must have a unique server ID. If this has not already been done, this part of slave setup requires a server restart.

If the slave server ID is not already set, or the current value

conflicts with the value that you have chosen for the master

server, shut down the slave server and edit the

[mysqld] section of the configuration file to

specify a unique server ID. For example:

[mysqld] server-id=2

After making the changes, restart the server.

If you are setting up multiple slaves, each one must have a

unique nonzero server-id value

that differs from that of the master and from any of the other

slaves.

Binary logging is enabled by default on all servers. A slave is not required to have binary logging enabled for replication to take place. However, binary logging on a slave means that the slave's binary log can be used for data backups and crash recovery.

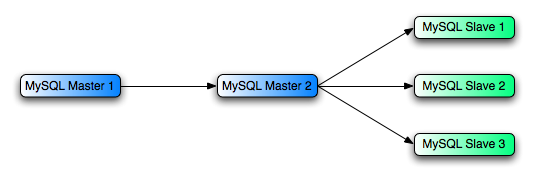

Slaves that have binary logging enabled can also be used as part of a more complex replication topology. For example, you might want to set up replication servers using this chained arrangement:

A -> B -> C

Here, A serves as the master for the slave

B, and B serves as the

master for the slave C. For this to work,

B must be both a master

and a slave. Updates received from

A must be logged by B to

its binary log, in order to be passed on to

C. In addition to binary logging, this

replication topology requires the

--log-slave-updates option to be

enabled. With this option, the slave writes updates that are

received from a master server and performed by the slave's SQL

thread to the slave's own binary log. The

--log-slave-updates option is

enabled by default.

If you need to disable binary logging or slave update logging on

a slave server, you can do this by specifying the

--skip-log-bin

and

--skip-log-slave-updates

options for the slave.

Each slave connects to the master using a MySQL user name and

password, so there must be a user account on the master that the

slave can use to connect. The user name is specified by the

MASTER_USER option on the CHANGE

MASTER TO command when you set up a replication slave.

Any account can be used for this operation, providing it has

been granted the REPLICATION

SLAVE privilege. You can choose to create a different

account for each slave, or connect to the master using the same

account for each slave.

Although you do not have to create an account specifically for

replication, you should be aware that the replication user name

and password are stored in plain text in the master info

repository table mysql.slave_master_info (see

Section 17.2.4.2, “Slave Status Logs”). Therefore, you may want to

create a separate account that has privileges only for the

replication process, to minimize the possibility of compromise

to other accounts.

To create a new account, use CREATE

USER. To grant this account the privileges required

for replication, use the GRANT

statement. If you create an account solely for the purposes of

replication, that account needs only the

REPLICATION SLAVE

privilege. For example, to set up a new user,

repl, that can connect for replication from

any host within the example.com domain, issue

these statements on the master:

mysql>CREATE USER 'repl'@'%.example.com' IDENTIFIED BY 'mysql>password';GRANT REPLICATION SLAVE ON *.* TO 'repl'@'%.example.com';

See Section 13.7.1, “Account Management Statements”, for more information on statements for manipulation of user accounts.

To connect to the replication master using a user account that

authenticates with the

caching_sha2_password plugin, you must

either set up a secure connection as described in

Section 17.3.9, “Setting Up Replication to Use Encrypted Connections”,

or enable the unencrypted connection to support password

exchange using an RSA key pair. The

caching_sha2_password authentication plugin

is the default for new users created from MySQL 8.0 (for

details, see

Section 6.5.1.3, “Caching SHA-2 Pluggable Authentication”).

If the user account that you create or use for replication (as

specified by the MASTER_USER option) uses

this authentication plugin, and you are not using a secure

connection, you must enable RSA key pair-based password

exchange for a successful connection.

To configure the slave to start the replication process at the correct point, you need to note the master's current coordinates within its binary log.

This procedure uses FLUSH TABLES WITH

READ LOCK, which blocks

COMMIT operations for

InnoDB tables.

If you are planning to shut down the master to create a data snapshot, you can optionally skip this procedure and instead store a copy of the binary log index file along with the data snapshot. In that situation, the master creates a new binary log file on restart. The master binary log coordinates where the slave must start the replication process are therefore the start of that new file, which is the next binary log file on the master following after the files that are listed in the copied binary log index file.

To obtain the master binary log coordinates, follow these steps:

Start a session on the master by connecting to it with the command-line client, and flush all tables and block write statements by executing the

FLUSH TABLES WITH READ LOCKstatement:mysql>

FLUSH TABLES WITH READ LOCK;WarningLeave the client from which you issued the

FLUSH TABLESstatement running so that the read lock remains in effect. If you exit the client, the lock is released.In a different session on the master, use the

SHOW MASTER STATUSstatement to determine the current binary log file name and position:mysql >

SHOW MASTER STATUS;+------------------+----------+--------------+------------------+ | File | Position | Binlog_Do_DB | Binlog_Ignore_DB | +------------------+----------+--------------+------------------+ | mysql-bin.000003 | 73 | test | manual,mysql | +------------------+----------+--------------+------------------+The

Filecolumn shows the name of the log file and thePositioncolumn shows the position within the file. In this example, the binary log file ismysql-bin.000003and the position is 73. Record these values. You need them later when you are setting up the slave. They represent the replication coordinates at which the slave should begin processing new updates from the master.If the master has been running previously with binary logging disabled, the log file name and position values displayed by

SHOW MASTER STATUSor mysqldump --master-data will be empty. In that case, the values that you need to use later when specifying the slave's log file and position are the empty string ('') and4.

You now have the information you need to enable the slave to start reading from the binary log in the correct place to start replication.

The next step depends on whether you have existing data on the master. Choose one of the following options:

If you have existing data that needs be to synchronized with the slave before you start replication, leave the client running so that the lock remains in place. This prevents any further changes being made, so that the data copied to the slave is in synchrony with the master. Proceed to Section 17.1.2.5, “Choosing a Method for Data Snapshots”.

If you are setting up a new master and slave replication group, you can exit the first session to release the read lock. See Section 17.1.2.6.1, “Setting Up Replication with New Master and Slaves” for how to proceed.

If the master database contains existing data it is necessary to copy this data to each slave. There are different ways to dump the data from the master database. The following sections describe possible options.

To select the appropriate method of dumping the database, choose between these options:

Use the mysqldump tool to create a dump of all the databases you want to replicate. This is the recommended method, especially when using

InnoDB.If your database is stored in binary portable files, you can copy the raw data files to a slave. This can be more efficient than using mysqldump and importing the file on each slave, because it skips the overhead of updating indexes as the

INSERTstatements are replayed. With storage engines such asInnoDBthis is not recommended.

To create a snapshot of the data in an existing master database, use the mysqldump tool. Once the data dump has been completed, import this data into the slave before starting the replication process.

The following example dumps all databases to a file named

dbdump.db, and includes the

--master-data option which

automatically appends the CHANGE MASTER

TO statement required on the slave to start the

replication process:

shell> mysqldump --all-databases --master-data > dbdump.db

If you do not use

--master-data, then it is

necessary to lock all tables in a separate session manually.

See Section 17.1.2.4, “Obtaining the Replication Master Binary Log Coordinates”.

It is possible to exclude certain databases from the dump

using the mysqldump tool. If you want to

choose which databases to include in the dump, do not use

--all-databases. Choose one

of these options:

Exclude all the tables in the database using

--ignore-tableoption.Name only those databases which you want dumped using the

--databasesoption.

For more information, see Section 4.5.4, “mysqldump — A Database Backup Program”.

To import the data, either copy the dump file to the slave, or access the file from the master when connecting remotely to the slave.

This section describes how to create a data snapshot using the raw files which make up the database. Employing this method with a table using a storage engine that has complex caching or logging algorithms requires extra steps to produce a perfect “point in time” snapshot: the initial copy command could leave out cache information and logging updates, even if you have acquired a global read lock. How the storage engine responds to this depends on its crash recovery abilities.

If you use InnoDB tables, you can

use the mysqlbackup command from the MySQL

Enterprise Backup component to produce a consistent snapshot.

This command records the log name and offset corresponding to

the snapshot to be used on the slave. MySQL Enterprise Backup

is a commercial product that is included as part of a MySQL

Enterprise subscription. See

Section 30.2, “MySQL Enterprise Backup Overview” for detailed

information.

This method also does not work reliably if the master and

slave have different values for

ft_stopword_file,

ft_min_word_len, or

ft_max_word_len and you are

copying tables having full-text indexes.

Assuming the above exceptions do not apply to your database,

use the cold backup

technique to obtain a reliable binary snapshot of

InnoDB tables: do a

slow shutdown of the

MySQL Server, then copy the data files manually.

To create a raw data snapshot of

MyISAM tables when your MySQL

data files exist on a single file system, you can use standard

file copy tools such as cp or

copy, a remote copy tool such as

scp or rsync, an

archiving tool such as zip or

tar, or a file system snapshot tool such as

dump. If you are replicating only certain

databases, copy only those files that relate to those tables.

For InnoDB, all tables in all databases are

stored in the system

tablespace files, unless you have the

innodb_file_per_table option

enabled.

The following files are not required for replication:

Files relating to the

mysqldatabase.The master info repository file

master.info, if used; the use of this file is now deprecated (see Section 17.2.4, “Replication Relay and Status Logs”).The master's binary log files, with the exception of the binary log index file if you are going to use this to locate the master binary log coordinates for the slave.

Any relay log files.

Depending on whether you are using InnoDB

tables or not, choose one of the following:

If you are using InnoDB tables,

and also to get the most consistent results with a raw data

snapshot, shut down the master server during the process, as

follows:

Acquire a read lock and get the master's status. See Section 17.1.2.4, “Obtaining the Replication Master Binary Log Coordinates”.

In a separate session, shut down the master server:

shell>

mysqladmin shutdownMake a copy of the MySQL data files. The following examples show common ways to do this. You need to choose only one of them:

shell>

tar cfshell>/tmp/db.tar./datazip -rshell>/tmp/db.zip./datarsync --recursive./data/tmp/dbdataRestart the master server.

If you are not using InnoDB

tables, you can get a snapshot of the system from a master

without shutting down the server as described in the following

steps:

Acquire a read lock and get the master's status. See Section 17.1.2.4, “Obtaining the Replication Master Binary Log Coordinates”.

Make a copy of the MySQL data files. The following examples show common ways to do this. You need to choose only one of them:

shell>

tar cfshell>/tmp/db.tar./datazip -rshell>/tmp/db.zip./datarsync --recursive./data/tmp/dbdataIn the client where you acquired the read lock, release the lock:

mysql>

UNLOCK TABLES;

Once you have created the archive or copy of the database, copy the files to each slave before starting the slave replication process.

The following sections describe how to set up slaves. Before you proceed, ensure that you have:

Configured the MySQL master with the necessary configuration properties. See Section 17.1.2.1, “Setting the Replication Master Configuration”.

Obtained the master status information, or a copy of the master's binary log index file made during a shutdown for the data snapshot. See Section 17.1.2.4, “Obtaining the Replication Master Binary Log Coordinates”.

On the master, released the read lock:

mysql>

UNLOCK TABLES;On the slave, edited the MySQL configuration. See Section 17.1.2.2, “Setting the Replication Slave Configuration”.

The next steps depend on whether you have existing data to import to the slave or not. See Section 17.1.2.5, “Choosing a Method for Data Snapshots” for more information. Choose one of the following:

If you do not have a snapshot of a database to import, see Section 17.1.2.6.1, “Setting Up Replication with New Master and Slaves”.

If you have a snapshot of a database to import, see Section 17.1.2.6.2, “Setting Up Replication with Existing Data”.

When there is no snapshot of a previous database to import, configure the slave to start the replication from the new master.

To set up replication between a master and a new slave:

Start up the MySQL slave.

Execute a

CHANGE MASTER TOstatement to set the master replication server configuration. See Section 17.1.2.7, “Setting the Master Configuration on the Slave”.

Perform these slave setup steps on each slave.

This method can also be used if you are setting up new servers but have an existing dump of the databases from a different server that you want to load into your replication configuration. By loading the data into a new master, the data is automatically replicated to the slaves.

If you are setting up a new replication environment using the data from a different existing database server to create a new master, run the dump file generated from that server on the new master. The database updates are automatically propagated to the slaves:

shell> mysql -h master < fulldb.dump

When setting up replication with existing data, transfer the snapshot from the master to the slave before starting replication. The process for importing data to the slave depends on how you created the snapshot of data on the master.

Choose one of the following:

If you used mysqldump:

Start the slave, using the

--skip-slave-startoption so that replication does not start.Import the dump file:

shell>

mysql < fulldb.dump

If you created a snapshot using the raw data files:

Extract the data files into your slave data directory. For example:

shell>

tar xvf dbdump.tarYou may need to set permissions and ownership on the files so that the slave server can access and modify them.

Start the slave, using the

--skip-slave-startoption so that replication does not start.Configure the slave with the replication coordinates from the master. This tells the slave the binary log file and position within the file where replication needs to start. Also, configure the slave with the login credentials and host name of the master. For more information on the

CHANGE MASTER TOstatement required, see Section 17.1.2.7, “Setting the Master Configuration on the Slave”.Start the slave threads:

mysql>

START SLAVE;

After you have performed this procedure, the slave connects to the master and replicates any updates that have occurred on the master since the snapshot was taken. Error messages are issued to the slave's error log if it is not able to replicate for any reason.

The slave uses information logged in its master info log and

relay log info log to keep track of how much of the

master's binary log it has processed. From MySQL 8.0, by

default, the repositories for these slave status logs are

tables named slave_master_info and

slave_relay_log_info in the

mysql database. The alternative settings

--master-info-repository=FILE and

--relay-log-info-repository=FILE, where the

repositories are files named master.info

and relay-log.info in the data directory,

are now deprecated and will be removed in a future release.

Do not remove or edit these tables (or

files, if used) unless you know exactly what you are doing and

fully understand the implications. Even in that case, it is

preferred that you use the CHANGE MASTER

TO statement to change replication parameters. The

slave uses the values specified in the statement to update the

slave status logs automatically. See

Section 17.2.4, “Replication Relay and Status Logs”, for more information.

The contents of the master info log override some of the

server options specified on the command line or in

my.cnf. See

Section 17.1.6, “Replication and Binary Logging Options and Variables”, for more details.

A single snapshot of the master suffices for multiple slaves. To set up additional slaves, use the same master snapshot and follow the slave portion of the procedure just described.

To set up the slave to communicate with the master for replication, configure the slave with the necessary connection information. To do this, execute the following statement on the slave, replacing the option values with the actual values relevant to your system:

mysql>CHANGE MASTER TO->MASTER_HOST='->master_host_name',MASTER_USER='->replication_user_name',MASTER_PASSWORD='->replication_password',MASTER_LOG_FILE='->recorded_log_file_name',MASTER_LOG_POS=recorded_log_position;

Replication cannot use Unix socket files. You must be able to connect to the master MySQL server using TCP/IP.

The CHANGE MASTER TO statement

has other options as well. For example, it is possible to set up

secure replication using SSL. For a full list of options, and

information about the maximum permissible length for the

string-valued options, see Section 13.4.2.1, “CHANGE MASTER TO Syntax”.

As noted in

Section 17.1.2.3, “Creating a User for Replication”, if you

are not using a secure connection and the user account named

in the MASTER_USER option authenticates

with the caching_sha2_password plugin (the

default from MySQL 8.0), you must specify the

MASTER_PUBLIC_KEY_PATH or

GET_MASTER_PUBLIC_KEY option in the

CHANGE MASTER TO statement to enable RSA

key pair-based password exchange.

You can add another slave to an existing replication configuration without stopping the master. To do this, you can set up the new slave by copying the data directory of an existing slave, and giving the new slave a different server ID (which is user-specified) and server UUID (which is generated at startup).

To duplicate an existing slave:

Stop the existing slave and record the slave status information, particularly the master binary log file and relay log file positions. You can view the slave status either in the Performance Schema replication tables (see Section 26.12.11, “Performance Schema Replication Tables”), or by issuing

SHOW SLAVE STATUSas follows:mysql>

STOP SLAVE;mysql>SHOW SLAVE STATUS\GShut down the existing slave:

shell>

mysqladmin shutdownCopy the data directory from the existing slave to the new slave, including the log files and relay log files. You can do this by creating an archive using tar or

WinZip, or by performing a direct copy using a tool such as cp or rsync.ImportantBefore copying, verify that all the files relating to the existing slave actually are stored in the data directory. For example, the

InnoDBsystem tablespace, undo tablespace, and redo log might be stored in an alternative location.InnoDBtablespace files and file-per-table tablespaces might have been created in other directories. The binary logs and relay logs for the slave might be in their own directories outside the data directory. Check through the system variables that are set for the existing slave and look for any alternative paths that have been specified. If you find any, copy these directories over as well.During copying, if files have been used for the master info and relay log info repositories (see Section 17.2.4, “Replication Relay and Status Logs”), ensure that you also copy these files from the existing slave to the new slave. If tables have been used for the repositories, which is the default from MySQL 8.0, the tables are in the data directory.

After copying, delete the

auto.cnffile from the copy of the data directory on the new slave, so that the new slave is started with a different generated server UUID. The server UUID must be unique.

A common problem that is encountered when adding new replication slaves is that the new slave fails with a series of warning and error messages like these:

071118 16:44:10 [Warning] Neither --relay-log nor --relay-log-index were used; so replication may break when this MySQL server acts as a slave and has his hostname changed!! Please use '--relay-log=

new_slave_hostname-relay-bin' to avoid this problem. 071118 16:44:10 [ERROR] Failed to open the relay log './old_slave_hostname-relay-bin.003525' (relay_log_pos 22940879) 071118 16:44:10 [ERROR] Could not find target log during relay log initialization 071118 16:44:10 [ERROR] Failed to initialize the master info structureThis situation can occur if the

--relay-logoption is not specified, as the relay log files contain the host name as part of their file names. This is also true of the relay log index file if the--relay-log-indexoption is not used. See Section 17.1.6, “Replication and Binary Logging Options and Variables”, for more information about these options.To avoid this problem, use the same value for

--relay-logon the new slave that was used on the existing slave. If this option was not set explicitly on the existing slave, useexisting_slave_hostname-relay-bin--relay-log-indexoption on the new slave to match what was used on the existing slave. If this option was not set explicitly on the existing slave, useexisting_slave_hostname-relay-bin.indexIf you have not already done so, issue

STOP SLAVEon the new slave.If you have already started the existing slave again, issue

STOP SLAVEon the existing slave as well.Copy the contents of the existing slave's relay log index file into the new slave's relay log index file, making sure to overwrite any content already in the file.

Proceed with the remaining steps in this section.

When copying is complete, restart the existing slave.

On the new slave, edit the configuration and give the new slave a unique server ID (using the

server-idoption) that is not used by the master or any of the existing slaves.Start the new slave server, specifying the

--skip-slave-startoption so that replication does not start yet. Use the Performance Schema replication tables or issueSHOW SLAVE STATUSto confirm that the new slave has the correct settings when compared with the existing slave. Also display the server ID and server UUID and verify that these are correct and unique for the new slave.Start the slave threads by issuing a

START SLAVEstatement:mysql>

START SLAVE;The new slave now uses the information in its master info repository to start the replication process.

This section explains transaction-based replication using global transaction identifiers (GTIDs). When using GTIDs, each transaction can be identified and tracked as it is committed on the originating server and applied by any slaves; this means that it is not necessary when using GTIDs to refer to log files or positions within those files when starting a new slave or failing over to a new master, which greatly simplifies these tasks. Because GTID-based replication is completely transaction-based, it is simple to determine whether masters and slaves are consistent; as long as all transactions committed on a master are also committed on a slave, consistency between the two is guaranteed. You can use either statement-based or row-based replication with GTIDs (see Section 17.2.1, “Replication Formats”); however, for best results, we recommend that you use the row-based format.

GTIDs are always preserved between master and slave. This means that you can always determine the source for any transaction applied on any slave by examining its binary log. In addition, once a transaction with a given GTID is committed on a given server, any subsequent transaction having the same GTID is ignored by that server. Thus, a transaction committed on the master can be applied no more than once on the slave, which helps to guarantee consistency.

This section discusses the following topics:

How GTIDs are defined and created, and how they are represented in a MySQL server (see Section 17.1.3.1, “GTID Format and Storage”).

The life cycle of a GTID (see Section 17.1.3.2, “GTID Life Cycle”).

The auto-positioning function for synchronizing a slave and master that use GTIDs (see Section 17.1.3.3, “GTID Auto-Positioning”).

A general procedure for setting up and starting GTID-based replication (see Section 17.1.3.4, “Setting Up Replication Using GTIDs”).

Suggested methods for provisioning new replication servers when using GTIDs (see Section 17.1.3.5, “Using GTIDs for Failover and Scaleout”).

Restrictions and limitations that you should be aware of when using GTID-based replication (see Section 17.1.3.6, “Restrictions on Replication with GTIDs”).

Stored functions that you can use to work with GTIDs (see Section 17.1.3.7, “Stored Function Examples to Manipulate GTIDs”).

For information about MySQL Server options and variables relating to GTID-based replication, see Section 17.1.6.5, “Global Transaction ID Options and Variables”. See also Section 12.18, “Functions Used with Global Transaction Identifiers (GTIDs)”, which describes SQL functions supported by MySQL 8.0 for use with GTIDs.

A global transaction identifier (GTID) is a unique identifier created and associated with each transaction committed on the server of origin (the master). This identifier is unique not only to the server on which it originated, but is unique across all servers in a given replication topology.

GTID assignment distinguishes between client transactions, which are committed on the master, and replicated transactions, which are reproduced on a slave. When a client transaction is committed on the master, it is assigned a new GTID, provided that the transaction was written to the binary log. Client transactions are guaranteed to have monotonically increasing GTIDs without gaps between the generated numbers. If a client transaction is not written to the binary log (for example, because the transaction was filtered out, or the transaction was read-only), it is not assigned a GTID on the server of origin.

Replicated transactions retain the same GTID that was assigned to

the transaction on the server of origin. The GTID is present

before the replicated transaction begins to execute, and is

persisted even if the replicated transaction is not written to the

binary log on the slave, or is filtered out on the slave. The

MySQL system table mysql.gtid_executed is used

to preserve the assigned GTIDs of all the transactions applied on

a MySQL server, except those that are stored in a currently active

binary log file.

The auto-skip function for GTIDs means that a transaction committed on the master can be applied no more than once on the slave, which helps to guarantee consistency. Once a transaction with a given GTID has been committed on a given server, any attempt to execute a subsequent transaction with the same GTID is ignored by that server. No error is raised, and no statement in the transaction is executed.

If a transaction with a given GTID has started to execute on a server, but has not yet committed or rolled back, any attempt to start a concurrent transaction on the server with the same GTID will block. The server neither begins to execute the concurrent transaction nor returns control to the client. Once the first attempt at the transaction commits or rolls back, concurrent sessions that were blocking on the same GTID may proceed. If the first attempt rolled back, one concurrent session proceeds to attempt the transaction, and any other concurrent sessions that were blocking on the same GTID remain blocked. If the first attempt committed, all the concurrent sessions stop being blocked, and auto-skip all the statements of the transaction.

A GTID is represented as a pair of coordinates, separated by a

colon character (:), as shown here:

GTID =source_id:transaction_id

The source_id identifies the

originating server. Normally, the master's

server_uuid is used for this

purpose. The transaction_id is a

sequence number determined by the order in which the transaction

was committed on the master; for example, the first transaction to

be committed has 1 as its

transaction_id, and the tenth

transaction to be committed on the same originating server is

assigned a transaction_id of

10. It is not possible for a transaction to

have 0 as a sequence number in a GTID. For

example, the twenty-third transaction to be committed originally

on the server with the UUID

3E11FA47-71CA-11E1-9E33-C80AA9429562 has this

GTID:

3E11FA47-71CA-11E1-9E33-C80AA9429562:23

The GTID for a transaction is shown in the output from

mysqlbinlog, and it is used to identify an

individual transaction in the Performance Schema replication

status tables, for example,

replication_applier_status_by_worker.

The value stored by the gtid_next

system variable (@@GLOBAL.gtid_next) is a

single GTID.

A GTID set is a set comprising one or more single GTIDs or

ranges of GTIDs. GTID sets are used in a MySQL server in several

ways. For example, the values stored by the

gtid_executed and

gtid_purged system variables

are GTID sets. The START SLAVE

clauses UNTIL SQL_BEFORE_GTIDS and

UNTIL SQL_AFTER_GTIDS can be used to make a

slave process transactions only up to the first GTID in a GTID

set, or stop after the last GTID in a GTID set. The built-in

functions GTID_SUBSET() and

GTID_SUBTRACT() require GTID sets

as input.

A range of GTIDs originating from the same server can be collapsed into a single expression, as shown here:

3E11FA47-71CA-11E1-9E33-C80AA9429562:1-5

The above example represents the first through fifth

transactions originating on the MySQL server whose

server_uuid is

3E11FA47-71CA-11E1-9E33-C80AA9429562.

Multiple single GTIDs or ranges of GTIDs originating from the

same server can also be included in a single expression, with

the GTIDs or ranges separated by colons, as in the following

example:

3E11FA47-71CA-11E1-9E33-C80AA9429562:1-3:11:47-49

A GTID set can include any combination of single GTIDs and

ranges of GTIDs, and it can include GTIDs originating from

different servers. This example shows the GTID set stored in the

gtid_executed system variable

(@@GLOBAL.gtid_executed) of a slave that has

applied transactions from more than one master:

2174B383-5441-11E8-B90A-C80AA9429562:1-3, 24DA167-0C0C-11E8-8442-00059A3C7B00:1-19

When GTID sets are returned from server variables, UUIDs are in alphabetical order, and numeric intervals are merged and in ascending order.

The syntax for a GTID set is as follows:

gtid_set:uuid_set[,uuid_set] ... | ''uuid_set:uuid:interval[:interval]...uuid:hhhhhhhh-hhhh-hhhh-hhhh-hhhhhhhhhhhhh: [0-9|A-F]interval:n[-n] (n>= 1)

GTIDs are stored in a table named

gtid_executed, in the

mysql database. A row in this table contains,

for each GTID or set of GTIDs that it represents, the UUID of

the originating server, and the starting and ending transaction

IDs of the set; for a row referencing only a single GTID, these

last two values are the same.

The mysql.gtid_executed table is created (if

it does not already exist) when MySQL Server is installed or

upgraded, using a CREATE TABLE

statement similar to that shown here:

CREATE TABLE gtid_executed (

source_uuid CHAR(36) NOT NULL,

interval_start BIGINT(20) NOT NULL,

interval_end BIGINT(20) NOT NULL,

PRIMARY KEY (source_uuid, interval_start)

)

As with other MySQL system tables, do not attempt to create or modify this table yourself.

The mysql.gtid_executed table is provided for

internal use by the MySQL server. It enables a slave to use

GTIDs when binary logging is disabled on the slave, and it

enables retention of the GTID state when the binary logs have

been lost. The mysql.gtid_executed table is

reset by RESET MASTER.

GTIDs are stored in the mysql.gtid_executed

table only when gtid_mode is

ON or ON_PERMISSIVE. The

point at which GTIDs are stored depends on whether binary

logging is enabled or disabled:

If binary logging is disabled (

log_binisOFF), or iflog_slave_updatesis disabled, the server stores the GTID belonging to each transaction together with the transaction in the table. In addition, the table is compressed periodically at a user-configurable rate; see mysql.gtid_executed Table Compression, for more information. This situation can only apply on a replication slave where binary logging or slave update logging is disabled. It does not apply on a replication master, because on a master, binary logging must be enabled for replication to take place.If binary logging is enabled (

log_binisON), whenever the binary log is rotated or the server is shut down, the server writes GTIDs for all transactions that were written into the previous binary log into themysql.gtid_executedtable. This situation applies on a replication master, or a replication slave where binary logging is enabled.In the event of the server stopping unexpectedly, the set of GTIDs from the current binary log file is not saved in the

mysql.gtid_executedtable. These GTIDs are added to the table from the binary log file during recovery. The exception to this is if you disable binary logging when the server is restarted (using--skip-log-binor--disable-log-bin). In this situation, the server cannot access the binary log file to recover the GTIDs, so replication cannot be started.When binary logging is enabled, the

mysql.gtid_executedtable does not hold a complete record of the GTIDs for all executed transactions. That information is provided by the global value of thegtid_executedsystem variable. Always use@@GLOBAL.gtid_executed, which is updated after every commit, to represent the GTID state for the MySQL server, and do not query themysql.gtid_executedtable.

Over the course of time, the

mysql.gtid_executed table can become filled

with many rows referring to individual GTIDs that originate on

the same server, and whose transaction IDs make up a range,

similar to what is shown here:

+--------------------------------------+----------------+--------------+ | source_uuid | interval_start | interval_end | |--------------------------------------+----------------+--------------| | 3E11FA47-71CA-11E1-9E33-C80AA9429562 | 37 | 37 | | 3E11FA47-71CA-11E1-9E33-C80AA9429562 | 38 | 38 | | 3E11FA47-71CA-11E1-9E33-C80AA9429562 | 39 | 39 | | 3E11FA47-71CA-11E1-9E33-C80AA9429562 | 40 | 40 | | 3E11FA47-71CA-11E1-9E33-C80AA9429562 | 41 | 41 | | 3E11FA47-71CA-11E1-9E33-C80AA9429562 | 42 | 42 | | 3E11FA47-71CA-11E1-9E33-C80AA9429562 | 43 | 43 | ...

To save space, the MySQL server compresses the

mysql.gtid_executed table periodically by

replacing each such set of rows with a single row that spans the

entire interval of transaction identifiers, like this:

+--------------------------------------+----------------+--------------+ | source_uuid | interval_start | interval_end | |--------------------------------------+----------------+--------------| | 3E11FA47-71CA-11E1-9E33-C80AA9429562 | 37 | 43 | ...

You can control the number of transactions that are allowed to

elapse before the table is compressed, and thus the compression

rate, by setting the

gtid_executed_compression_period

system variable. This variable's default value is 1000,

meaning that by default, compression of the table is performed

after each 1000 transactions. Setting

gtid_executed_compression_period

to 0 prevents the compression from being performed at all, and

you should be prepared for a potentially large increase in the

amount of disk space that may be required by the

gtid_executed table if you do this.

When binary logging is enabled, the value of

gtid_executed_compression_period

is not used and the

mysql.gtid_executed table is compressed on

each binary log rotation.

Compression of the mysql.gtid_executed table

is performed by a dedicated foreground thread named

thread/sql/compress_gtid_table. This thread

is not listed in the output of SHOW

PROCESSLIST, but it can be viewed as a row in the

threads table, as shown here:

mysql> SELECT * FROM performance_schema.threads WHERE NAME LIKE '%gtid%'\G

*************************** 1. row ***************************

THREAD_ID: 26

NAME: thread/sql/compress_gtid_table

TYPE: FOREGROUND

PROCESSLIST_ID: 1

PROCESSLIST_USER: NULL

PROCESSLIST_HOST: NULL

PROCESSLIST_DB: NULL

PROCESSLIST_COMMAND: Daemon

PROCESSLIST_TIME: 1509

PROCESSLIST_STATE: Suspending

PROCESSLIST_INFO: NULL

PARENT_THREAD_ID: 1

ROLE: NULL

INSTRUMENTED: YES

HISTORY: YES

CONNECTION_TYPE: NULL

THREAD_OS_ID: 18677

The thread/sql/compress_gtid_table thread

normally sleeps until

gtid_executed_compression_period

transactions have been executed, then wakes up to perform

compression of the mysql.gtid_executed table

as described previously. It then sleeps until another

gtid_executed_compression_period

transactions have taken place, then wakes up to perform the

compression again, repeating this loop indefinitely. Setting

this value to 0 when binary logging is disabled means that the

thread always sleeps and never wakes up.

The life cycle of a GTID consists of the following steps:

A transaction is executed and committed on the master. This client transaction is assigned a GTID composed of the master's UUID and the smallest nonzero transaction sequence number not yet used on this server. The GTID is written to the master's binary log (immediately preceding the transaction itself in the log). If a client transaction is not written to the binary log (for example, because the transaction was filtered out, or the transaction was read-only), it is not assigned a GTID.

If a GTID was assigned for the transaction, the GTID is persisted atomically at commit time by writing it to the binary log at the beginning of the transaction (as a

Gtid_log_event). Whenever the binary log is rotated or the server is shut down, the server writes GTIDs for all transactions that were written into the previous binary log file into themysql.gtid_executedtable.If a GTID was assigned for the transaction, the GTID is externalized non-atomically (very shortly after the transaction is committed) by adding it to the set of GTIDs in the

gtid_executedsystem variable (@@GLOBAL.gtid_executed). This GTID set contains a representation of the set of all committed GTID transactions, and it is used in replication as a token that represents the server state. With binary logging enabled (as required for the master), the set of GTIDs in thegtid_executedsystem variable is a complete record of the transactions applied, but themysql.gtid_executedtable is not, because the most recent history is still in the current binary log file.After the binary log data is transmitted to the slave and stored in the slave's relay log (using established mechanisms for this process, see Section 17.2, “Replication Implementation”, for details), the slave reads the GTID and sets the value of its

gtid_nextsystem variable as this GTID. This tells the slave that the next transaction must be logged using this GTID. It is important to note that the slave setsgtid_nextin a session context.The slave verifies that no thread has yet taken ownership of the GTID in

gtid_nextin order to process the transaction. By reading and checking the replicated transaction's GTID first, before processing the transaction itself, the slave guarantees not only that no previous transaction having this GTID has been applied on the slave, but also that no other session has already read this GTID but has not yet committed the associated transaction. So if multiple clients attempt to apply the same transaction concurrently, the server resolves this by letting only one of them execute. Thegtid_ownedsystem variable (@@GLOBAL.gtid_owned) for the slave shows each GTID that is currently in use and the ID of the thread that owns it. If the GTID has already been used, no error is raised, and the auto-skip function is used to ignore the transaction.If the GTID has not been used, the slave applies the replicated transaction. Because

gtid_nextis set to the GTID already assigned by the master, the slave does not attempt to generate a new GTID for this transaction, but instead uses the GTID stored ingtid_next.If binary logging is enabled on the slave, the GTID is persisted atomically at commit time by writing it to the binary log at the beginning of the transaction (as a

Gtid_log_event). Whenever the binary log is rotated or the server is shut down, the server writes GTIDs for all transactions that were written into the previous binary log file into themysql.gtid_executedtable.If binary logging is disabled on the slave, the GTID is persisted atomically by writing it directly into the

mysql.gtid_executedtable. MySQL appends a statement to the transaction to insert the GTID into the table. From MySQL 8.0, this operation is atomic for DDL statements as well as for DML statements. In this situation, themysql.gtid_executedtable is a complete record of the transactions applied on the slave.Very shortly after the replicated transaction is committed on the slave, the GTID is externalized non-atomically by adding it to the set of GTIDs in the

gtid_executedsystem variable (@@GLOBAL.gtid_executed) for the slave. As for the master, this GTID set contains a representation of the set of all committed GTID transactions. If binary logging is disabled on the slave, themysql.gtid_executedtable is also a complete record of the transactions applied on the slave. If binary logging is enabled on the slave, meaning that some GTIDs are only recorded in the binary log, the set of GTIDs in thegtid_executedsystem variable is the only complete record.

Client transactions that are completely filtered out on the master

are not assigned a GTID, therefore they are not added to the set

of transactions in the

gtid_executed system variable, or

added to the mysql.gtid_executed table.

However, the GTIDs of replicated transactions that are completely

filtered out on the slave are persisted. If binary logging is

enabled on the slave, the filtered-out transaction is written to

the binary log as a Gtid_log_event followed by

an empty transaction containing only BEGIN and COMMIT statements.

If binary logging is disabled, the GTID of the filtered-out

transaction is written to the

mysql.gtid_executed table. Preserving the GTIDs

for filtered-out transactions ensures that the

mysql.gtid_executed table and the set of GTIDs

in the gtid_executed system

variable can be compressed. It also ensures that the filtered-out

transactions are not retrieved again if the slave reconnects to

the master, as explained in

Section 17.1.3.3, “GTID Auto-Positioning”.

On a multithreaded replication slave (with

slave_parallel_workers > 0 ),

transactions can be applied in parallel, so replicated

transactions can commit out of order (unless

slave_preserve_commit_order=1 is

set). When that happens, the set of GTIDs in the

gtid_executed system

variable will contain multiple GTID ranges with gaps between them.

(On a master or a single-threaded replication slave, there will be

monotonically increasing GTIDs without gaps between the numbers.)

Gaps on multithreaded replication slaves only occur among the most

recently applied transactions, and are filled in as replication

progresses. When replication threads are stopped cleanly using the

STOP SLAVE statement,

ongoing transactions are applied so that the gaps are filled in.

In the event of a shutdown such as a server failure or the use of

the KILL statement to stop

replication threads, the gaps might remain.

It is possible for a client to simulate a replicated transaction

by setting the variable @@SESSION.gtid_next to

a valid GTID (consisting of a UUID and a transaction sequence

number, separated by a colon) before executing the transaction.

This technique is used by mysqlbinlog to

generate a dump of the binary log that the client can replay to

preserve GTIDs. A simulated replicated transaction committed

through a client is completely equivalent to a replicated

transaction committed through a replication thread, and they

cannot be distinguished after the fact.

The set of GTIDs in the

gtid_purged system variable

(@@GLOBAL.gtid_purged) contains the GTIDs of

all the transactions that have been committed on the server, but

do not exist in any binary log file on the server. The following

categories of GTIDs are in this set:

GTIDs of replicated transactions that were committed with binary logging disabled on the slave

GTIDs of transactions that were written to a binary log file that has now been purged

GTIDs that were added explicitly to the set by the statement

SET @@GLOBAL.gtid_purged

The set of GTIDs in the

gtid_purged system variable is

initialized when the server starts. Every binary log file begins

with the event Previous_gtids_log_event,

which contains the set of GTIDs in all previous binary log files

(composed from the GTIDs in the preceding file's

Previous_gtids_log_event, and the GTIDs of

every Gtid_log_event in the file itself). The

contents of Previous_gtids_log_event in the

oldest and most recent binary log files are used to compute the

gtid_purged set at server

startup, as follows:

All GTIDs in Previous_gtids_log_event in the most recent binary log file + All GTIDs of transactions in the most recent binary log file + All GTIDs in the mysql.gtid_executed table - All GTIDs in Previous_gtids_log_event in the oldest binary log file

GTIDs replace the file-offset pairs previously required to determine points for starting, stopping, or resuming the flow of data between master and slave. When GTIDs are in use, all the information that the slave needs for synchronizing with the master is obtained directly from the replication data stream.

To start a slave using GTID-based replication, you do not include

MASTER_LOG_FILE or

MASTER_LOG_POS options in the

CHANGE MASTER TO statement

used to direct the slave to replicate from a given master. These

options specify the name of the log file and the starting position

within the file, but with GTIDs the slave does not need this

nonlocal data. Instead, you need to enable the

MASTER_AUTO_POSITION option. For full

instructions to configure and start masters and slaves using

GTID-based replication, see

Section 17.1.3.4, “Setting Up Replication Using GTIDs”.

The MASTER_AUTO_POSITION option is disabled by

default. If multi-source replication is enabled on the slave, you

need to set the option for each applicable replication channel.

Disabling the MASTER_AUTO_POSITION option again

makes the slave revert to file-based replication, in which case

you must also specify one or both of the

MASTER_LOG_FILE or

MASTER_LOG_POS options.

When a replication slave has GTIDs enabled

(GTID_MODE=ON,

ON_PERMISSIVE, or

OFF_PERMISSIVE ) and the

MASTER_AUTO_POSITION option enabled,

auto-positioning is activated for connection to the master. The

master must have

GTID_MODE=ON set in

order for the connection to succeed. In the initial handshake, the

slave sends a GTID set containing the transactions that it has

already received, committed, or both. This GTID set is equal to

the union of the set of GTIDs in the

gtid_executed system variable

(@@GLOBAL.gtid_executed), and the set of GTIDs

recorded in the Performance Schema

replication_connection_status

table as received transactions (the result of the statement

SELECT RECEIVED_TRANSACTION_SET FROM

PERFORMANCE_SCHEMA.replication_connection_status).

The master responds by sending all transactions recorded in its binary log whose GTID is not included in the GTID set sent by the slave. This exchange ensures that the master only sends the transactions with a GTID that the slave has not already received or committed. If the slave receives transactions from more than one master, as in the case of a diamond topology, the auto-skip function ensures that the transactions are not applied twice.

If any of the transactions that should be sent by the master have

been purged from the master's binary log, or added to the set of

GTIDs in the gtid_purged

system variable by another method, the master sends the error

ER_MASTER_HAS_PURGED_REQUIRED_GTIDS to the

slave, and replication does not start. The GTIDs of the missing

purged transactions are identified and listed in the master's

error log in the warning message

ER_FOUND_MISSING_GTIDS. The slave cannot

recover automatically from this error because parts of the

transaction history that are needed to catch up with the master

have been purged. Attempting to reconnect without the

MASTER_AUTO_POSITION option enabled only

results in the loss of the purged transactions on the slave. The

correct approach to recover from this situation is for the slave

to replicate the missing transactions listed in the

ER_FOUND_MISSING_GTIDS message from another

source, or for the slave to be replaced by a new slave created

from a more recent backup. Consider revising the binary log

expiration period

(binlog_expire_logs_seconds)

on the master to ensure that the situation does not occur again.

If during the exchange of transactions it is found that the slave

has received or committed transactions with the master's UUID in

the GTID, but the master itself does not have a record of them,

the master sends the error

ER_SLAVE_HAS_MORE_GTIDS_THAN_MASTER to the

slave and replication does not start. This situation can occur if

a master that does not have

sync_binlog=1 set experiences a

power failure or operating system crash, and loses committed

transactions that have not yet been synchronized to the binary log

file, but have been received by the slave. The master and slave

can diverge if any clients commit transactions on the master after

it is restarted, which can lead to the situation where the master

and slave are using the same GTID for different transactions. The

correct approach to recover from this situation is to check

manually whether the master and slave have diverged. If the same

GTID is now in use for different transactions, you either need to

perform manual conflict resolution for individual transactions as

required, or remove either the master or the slave from the

replication topology. If the issue is only missing transactions on

the master, you can make the master into a slave instead, allow it

to catch up with the other servers in the replication topology,

and then make it a master again if needed.

This section describes a process for configuring and starting GTID-based replication in MySQL 8.0. This is a “cold start” procedure that assumes either that you are starting the replication master for the first time, or that it is possible to stop it; for information about provisioning replication slaves using GTIDs from a running master, see Section 17.1.3.5, “Using GTIDs for Failover and Scaleout”. For information about changing GTID mode on servers online, see Section 17.1.5, “Changing Replication Modes on Online Servers”.

The key steps in this startup process for the simplest possible GTID replication topology, consisting of one master and one slave, are as follows:

If replication is already running, synchronize both servers by making them read-only.

Stop both servers.

Restart both servers with GTIDs enabled and the correct options configured.

The mysqld options necessary to start the servers as described are discussed in the example that follows later in this section.

Instruct the slave to use the master as the replication data source and to use auto-positioning. The SQL statements needed to accomplish this step are described in the example that follows later in this section.

Take a new backup. Binary logs containing transactions without GTIDs cannot be used on servers where GTIDs are enabled, so backups taken before this point cannot be used with your new configuration.

Start the slave, then disable read-only mode on both servers, so that they can accept updates.

In the following example, two servers are already running as

master and slave, using MySQL's binary log position-based

replication protocol. If you are starting with new servers, see

Section 17.1.2.3, “Creating a User for Replication” for information about

adding a specific user for replication connections and

Section 17.1.2.1, “Setting the Replication Master Configuration” for

information about setting the

server_id variable. The following

examples show how to store mysqld startup

options in server's option file, see

Section 4.2.7, “Using Option Files” for more information. Alternatively

you can use startup options when running

mysqld.

Most of the steps that follow require the use of the MySQL

root account or another MySQL user account that

has the SUPER privilege.

mysqladmin shutdown requires

either the SUPER privilege or the

SHUTDOWN privilege.

Step 1: Synchronize the servers.

This step is only required when working with servers which are

already replicating without using GTIDs. For new servers proceed

to Step 3. Make the servers read-only by setting the

read_only system variable to

ON on each server by issuing the following:

mysql> SET @@GLOBAL.read_only = ON;

Wait for all ongoing transactions to commit or roll back. Then, allow the slave to catch up with the master. It is extremely important that you make sure the slave has processed all updates before continuing.

If you use binary logs for anything other than replication, for example to do point in time backup and restore, wait until you do not need the old binary logs containing transactions without GTIDs. Ideally, wait for the server to purge all binary logs, and wait for any existing backup to expire.

It is important to understand that logs containing transactions without GTIDs cannot be used on servers where GTIDs are enabled. Before proceeding, you must be sure that transactions without GTIDs do not exist anywhere in the topology.

Step 2: Stop both servers.

Stop each server using mysqladmin as shown

here, where username is the user name

for a MySQL user having sufficient privileges to shut down the

server:

shell> mysqladmin -uusername -p shutdown

Then supply this user's password at the prompt.

Step 3: Start both servers with GTIDs enabled.

To enable GTID-based replication, each server must be started

with GTID mode enabled by setting the

gtid_mode variable to

ON, and with the

enforce_gtid_consistency

variable enabled to ensure that only statements which are safe

for GTID-based replication are logged. For example:

gtid_mode=ON enforce-gtid-consistency=true

In addition, you should start slaves with the

--skip-slave-start option before

configuring the slave settings. For more information on GTID

related options and variables, see

Section 17.1.6.5, “Global Transaction ID Options and Variables”.

It is not mandatory to have binary logging enabled in order to use

GTIDs when using the

mysql.gtid_executed Table. Masters

must always have binary logging enabled in order to be able to

replicate. However, slave servers can use GTIDs but without binary

logging. If you need to disable binary logging on a slave server,

you can do this by specifying the

--skip-log-bin

and

--skip-log-slave-updates

options for the slave.

Step 4: Configure the slave to use GTID-based auto-positioning.

Tell the slave to use the master with GTID based transactions as

the replication data source, and to use GTID-based

auto-positioning rather than file-based positioning. Issue a

CHANGE MASTER TO statement on the

slave, including the MASTER_AUTO_POSITION

option in the statement to tell the slave that the master's

transactions are identified by GTIDs.

You may also need to supply appropriate values for the master's host name and port number as well as the user name and password for a replication user account which can be used by the slave to connect to the master; if these have already been set prior to Step 1 and no further changes need to be made, the corresponding options can safely be omitted from the statement shown here.

mysql>CHANGE MASTER TO>MASTER_HOST =>host,MASTER_PORT =>port,MASTER_USER =>user,MASTER_PASSWORD =>password,MASTER_AUTO_POSITION = 1;

Neither the MASTER_LOG_FILE option nor the

MASTER_LOG_POS option may be used with

MASTER_AUTO_POSITION set equal to 1. Attempting

to do so causes the CHANGE MASTER

TO statement to fail with an error.

Step 5: Take a new backup. Existing backups that were made before you enabled GTIDs can no longer be used on these servers now that you have enabled GTIDs. Take a new backup at this point, so that you are not left without a usable backup.

For instance, you can execute FLUSH

LOGS on the server where you are taking backups. Then

either explicitly take a backup or wait for the next iteration of

any periodic backup routine you may have set up.

Step 6: Start the slave and disable read-only mode. Start the slave like this:

mysql> START SLAVE;

The following step is only necessary if you configured a server to be read-only in Step 1. To allow the server to begin accepting updates again, issue the following statement:

mysql> SET @@GLOBAL.read_only = OFF;

GTID-based replication should now be running, and you can begin (or resume) activity on the master as before. Section 17.1.3.5, “Using GTIDs for Failover and Scaleout”, discusses creation of new slaves when using GTIDs.